Lost in Style: Gaze-driven Adaptive Aid for VR Navigation

Abstract

After designing a virtual experience, virtual reality level designers face a key challenge: striking a balance between maintaining the immersiveness of VR and providing users with on-screen aids. These aids are often necessary for wayfinding in virtual environments with complex paths.

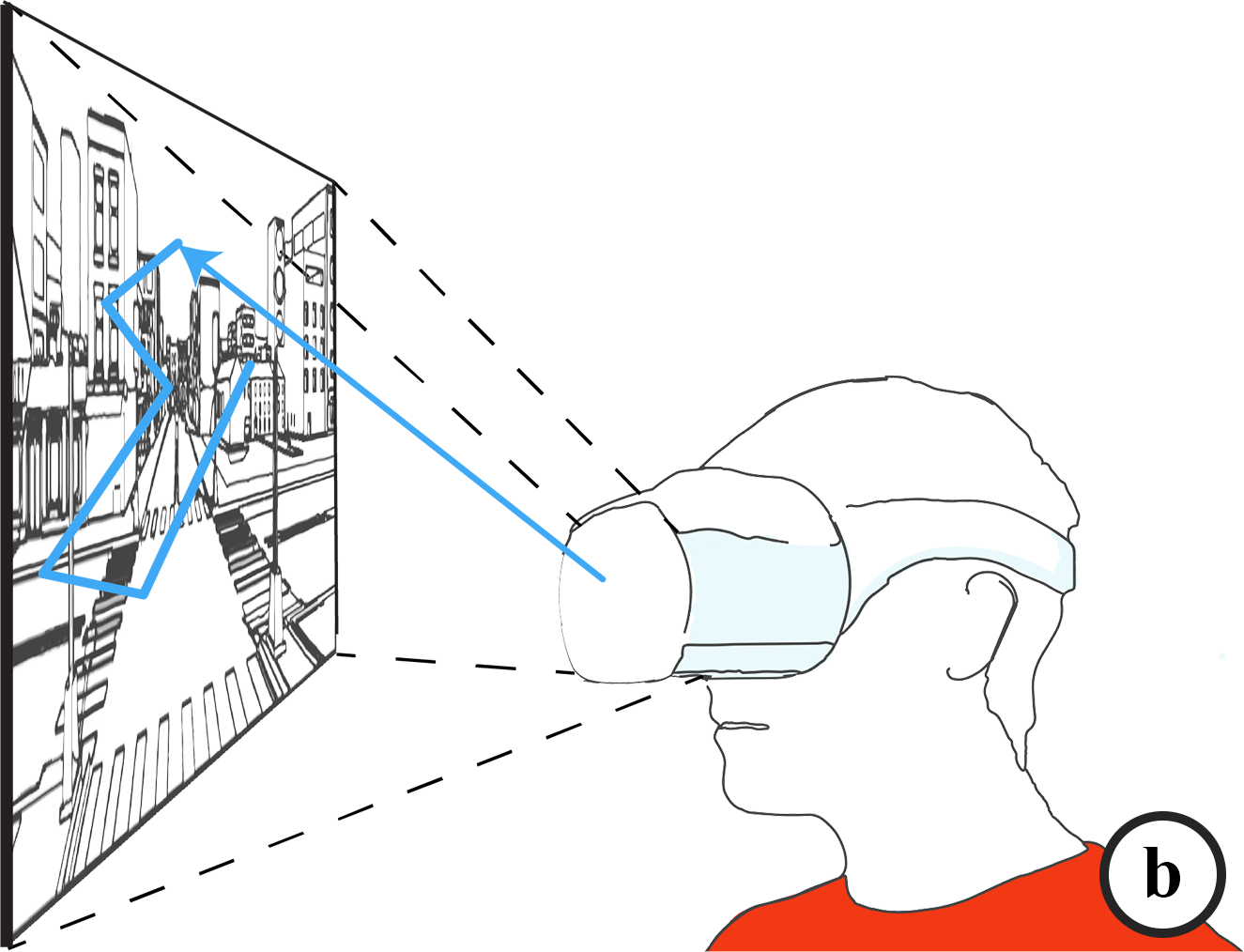

We introduce a novel adaptive aid that maintains the effectiveness of traditional aids while giving designers and users control over how often help is displayed. Our adaptive aid uses gaze patterns to predict a user's need for navigation aid in VR and displays mini-maps or arrows accordingly. Using a dataset of gaze angle sequences from users navigating a VR environment, together with markers of when those users requested aid, we trained an LSTM to classify a user's gaze sequence as needing navigation help, in which case an aid is displayed. We validated the efficacy of the adaptive aid for wayfinding by comparing it with other commonly used wayfinding aids.
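The classification step described above can be illustrated with a minimal sketch. It is not the trained model from the paper: the weights are random and untrained, and the per-sample feature layout (yaw, pitch in radians), the hidden size, the class name `TinyLSTMClassifier`, the helper `should_show_aid`, and the `threshold` parameter are all assumptions made for illustration. It shows only the general shape of the idea: an LSTM reads a window of gaze angles, outputs a probability that the user needs help, and a threshold decides whether an aid is displayed.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTMClassifier:
    """Single-layer LSTM plus a logistic output unit (untrained, illustrative only)."""

    def __init__(self, input_size=2, hidden_size=4, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        # One weight matrix and bias vector per gate:
        # input (i), forget (f), output (o), candidate (g).
        self.W = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)]
                   for _ in range(hidden_size)]
            for gate in "ifog"
        }
        self.b = {gate: [0.0] * hidden_size for gate in "ifog"}
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
        self.b_out = 0.0

    def _gate(self, name, xh, activation):
        # Affine transform of the concatenated [input, hidden] vector, then activation.
        return [activation(sum(w * v for w, v in zip(row, xh)) + bias)
                for row, bias in zip(self.W[name], self.b[name])]

    def predict_proba(self, sequence):
        """Return an estimate of P(user needs aid) for a sequence of gaze samples."""
        h = [0.0] * self.hidden_size  # hidden state
        c = [0.0] * self.hidden_size  # cell state
        for x in sequence:
            xh = list(x) + h
            i = self._gate("i", xh, sigmoid)
            f = self._gate("f", xh, sigmoid)
            o = self._gate("o", xh, sigmoid)
            g = self._gate("g", xh, math.tanh)
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        logit = sum(w * v for w, v in zip(self.w_out, h)) + self.b_out
        return sigmoid(logit)


def should_show_aid(model, gaze_window, threshold=0.5):
    """Hypothetical trigger: show a mini-map or arrow when the probability is high enough."""
    return model.predict_proba(gaze_window) >= threshold


# Synthetic window of 30 (yaw, pitch) samples in radians; not real gaze data.
window = [(0.1 * math.sin(t / 3.0), 0.05 * math.cos(t / 5.0)) for t in range(30)]
model = TinyLSTMClassifier()
print(should_show_aid(model, window, threshold=0.5))
```

In a sketch like this, the `threshold` value is one plausible place to expose the control over how often help is displayed: lowering it would show the aid more readily, raising it would show it less often.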

Keywords

Games/Play, Virtual/Augmented Reality, Eye Tracking

Media Coverage

- 2/6/2019 Japanese media Seamless VR: Article - twitter (Japanese) - twitter (English)

Publication

BibTeX

@inproceedings{lostvr,
  author={Alghofaili, Rawan and Sawahata, Yasuhito and Huang, Haikun and Wang, Hsueh-Cheng and Shiratori, Takaaki and Yu, Lap-Fai},
  title={Lost in Style: Gaze-driven Adaptive Aid for VR Navigation},
  booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},
  series={CHI '19},
  year={2019},
  publisher={ACM},
  location={Glasgow, UK},
  keywords={Games/Play, Virtual/Augmented Reality, Eye Tracking}
}

Acknowledgments

We thank Kristen Laird for her help in conducting the user studies. This research is supported by the National Science Foundation under award number 1565978. We are grateful to the anonymous reviewers for their constructive comments.